One of the biggest challenges in using Large Language Models (LLMs) in real-world applications is keeping them accurate, efficient, and predictable—especially when they’re interfacing with structured systems and business processes.

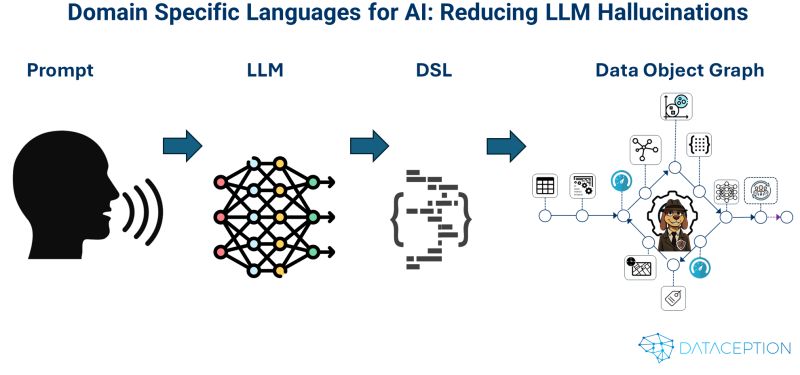

At Dataception, we’ve found that introducing Domain Specific Languages (DSLs) as an intermediate layer between the LLM and execution engines has been a game-changer. Not only does it reduce hallucinations, it also improves performance and trust in AI systems.

Let’s break down why this approach matters—and how it works in practice.

The Problem with Traditional LLM Outputs

When you ask an LLM to output structured formats like JSON or deeply nested schemas, you’re immediately dealing with three major problems:

Token Bloat:

Verbose formats consume excessive tokens, increasing latency and inference costs while shrinking the effective context window.

Error-Prone Complexity:

Maintaining consistency across complex data structures introduces risk. The more fields you ask the LLM to manage, the more likely it is to hallucinate or break the schema.

Syntax Over Semantics:

LLMs often prioritise correct formatting over delivering the right meaning. If your schema changes slightly, the whole output can fall apart.
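To make the token-bloat point concrete, here is a minimal sketch that puts the same request side by side as a nested JSON payload and as a one-line DSL statement. Both the JSON schema and the DSL syntax (`EXPOSURE sector=oil_gas SUM market_value`) are hypothetical illustrations, not Dataception's actual formats. Characters and whitespace-separated words are only rough proxies, because real token counts depend on the model's tokenizer.

```python
import json

# Hypothetical nested JSON an LLM might be asked to emit for
# "What's our exposure to oil & gas companies?"
json_payload = {
    "query": {
        "type": "exposure",
        "filters": [
            {"field": "sector", "operator": "equals", "value": "oil_gas"}
        ],
        "aggregation": {"function": "sum", "field": "market_value"},
    }
}

# The same intent in a compact, hypothetical DSL statement.
dsl_payload = "EXPOSURE sector=oil_gas SUM market_value"

json_text = json.dumps(json_payload)


def rough_size(text: str) -> dict:
    """Crude size proxies; actual token counts depend on the tokenizer."""
    return {"chars": len(text), "words": len(text.split())}


json_size = rough_size(json_text)
dsl_size = rough_size(dsl_payload)

print("JSON:", json_size)
print("DSL: ", dsl_size)
print(f"DSL is {json_size['chars'] / dsl_size['chars']:.1f}x shorter by characters")
```

Every brace, quote and repeated key in the JSON version is something the model has to generate, and get right, before any meaning is conveyed.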

Enter: Domain Specific Languages (DSLs)

DSLs are lightweight, structured languages crafted for a specific domain or task. When used as an intermediary layer between natural language and backend logic, they offer several crucial benefits:

✅ Compression: Far more compact than JSON or XML, so they consume fewer tokens

✅ Clarity: Defined rules and syntax act as a roadmap for the LLM

✅ Error Reduction: Guardrails prevent drifting outside valid logic

✅ Maintainability: Easier to extend and adapt as requirements evolve
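The "defined rules" and "guardrails" above can be sketched as a tiny parser that accepts only statements matching a fixed shape and a fixed vocabulary. This is a minimal illustration assuming a made-up grammar (`VERB field=value [AGG measure]`) and made-up allowed words. It is not a published Dataception grammar. Anything the model produces outside these rules is rejected before it can reach an execution engine.

```python
import re
from dataclasses import dataclass

# Hypothetical vocabulary; a production grammar would be richer.
ALLOWED_VERBS = {"EXPOSURE", "HOLDINGS"}
ALLOWED_FIELDS = {"sector", "region", "asset_class"}
ALLOWED_AGGS = {"SUM", "AVG", "COUNT"}

# Shape: VERB field=value [AGG measure]
STATEMENT = re.compile(
    r"^(?P<verb>[A-Z]+)\s+(?P<field>[a-z_]+)=(?P<value>[a-z_]+)"
    r"(?:\s+(?P<agg>[A-Z]+)\s+(?P<measure>[a-z_]+))?$"
)


class DSLError(ValueError):
    """Raised when model output falls outside the DSL's grammar or vocabulary."""


@dataclass(frozen=True)
class Command:
    verb: str
    field: str
    value: str
    agg: str = "SUM"
    measure: str = "market_value"


def parse(text: str) -> Command:
    m = STATEMENT.match(text.strip())
    if m is None:
        raise DSLError(f"syntax error: {text!r}")
    # Correct syntax is not enough: each token must also be in the allowed vocabulary.
    if m["verb"] not in ALLOWED_VERBS:
        raise DSLError(f"unknown verb {m['verb']!r}")
    if m["field"] not in ALLOWED_FIELDS:
        raise DSLError(f"unknown field {m['field']!r}")
    agg = m["agg"] or "SUM"  # optional clause defaults to SUM
    if agg not in ALLOWED_AGGS:
        raise DSLError(f"unknown aggregation {agg!r}")
    return Command(m["verb"], m["field"], m["value"], agg, m["measure"] or "market_value")


print(parse("EXPOSURE sector=oil_gas SUM market_value"))
try:
    parse("EXPOSURE sentiment=bullish")
except DSLError as err:
    print(err)  # unknown field 'sentiment'
```

Extending the language (the maintainability point) then amounts to adding a word to one of the allowed sets, rather than reshaping a deeply nested schema.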

A great example is BAML, which illustrates how schema-aligned parsing can combine flexibility with structure, reducing hallucinations while preserving semantic integrity.
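The core idea behind schema-aligned parsing can be illustrated with a toy. To be clear, the Python below is not BAML's API, and BAML's own parser is considerably more thorough. This sketch simply tolerates common formatting noise in model output (surrounding chatter, a trailing comma, a number returned as `"12.5%"`) and coerces the result into a declared schema, instead of failing on the first syntax slip. The `Exposure` schema and field names are hypothetical.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class Exposure:
    """Hypothetical target schema the LLM output must be aligned to."""
    sector: str
    weight_pct: float


def extract_object(raw: str) -> dict:
    """Find the outermost {...} in noisy model text and relax one common syntax slip."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    body = raw[start:end + 1]
    # Drop trailing commas before } or ]. Naive: would also alter such text inside strings.
    body = re.sub(r",\s*([}\]])", r"\1", body)
    return json.loads(body)


def align(data: dict) -> Exposure:
    """Coerce loosely typed values to the schema instead of rejecting them outright."""
    weight = data["weight_pct"]
    if isinstance(weight, str):
        weight = weight.strip().rstrip("%")  # accept "12.5%" as well as 12.5
    return Exposure(sector=str(data["sector"]).strip(), weight_pct=float(weight))


raw_output = 'Sure! Here is the result: {"sector": "oil_gas", "weight_pct": "12.5%",} Hope that helps.'
print(align(extract_object(raw_output)))
```

A strict `json.loads` on the raw text would fail outright; aligning to the schema keeps the meaning while still guaranteeing a well-typed result.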

Why This Works: Guardrails for Meaning

DSLs shift the focus from language generation to language translation—helping LLMs convert messy human instructions into machine-understandable logic with precision.

This not only increases reliability and interpretability, but also allows you to squeeze more semantic value from every token. That’s a massive win in production environments where latency and cost are critical.

Real-World Use Case: Investment Analysis with DOGs

Let’s bring this to life with a real-world scenario from our own work.

We’ve been using DSLs to power a system that models investment portfolios as Data Object Graphs (DOGs)—a hybrid graph structure where some nodes hold data (like assets or fund entities) and others perform execution (like valuation or rebalancing operations).
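As a rough mental model of the "hybrid" structure described above, the sketch below uses two node types: data nodes that hold facts and links, and execution nodes that compute a result on demand from whatever they are wired to. The class names, the `sector_weight` calculation and the holdings (including their values) are all hypothetical, invented for illustration; this is not Dataception's DOG implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Union


@dataclass
class DataNode:
    """Holds facts, e.g. an asset or a fund entity, plus links to other nodes."""
    name: str
    attrs: dict[str, object]
    edges: list[Node] = field(default_factory=list)


@dataclass
class ExecNode:
    """Performs a calculation (e.g. valuation) over the nodes wired into it."""
    name: str
    fn: Callable[[list[Node]], float]
    inputs: list[Node] = field(default_factory=list)

    def run(self) -> float:
        # Computed on demand from the current state of the connected nodes.
        return self.fn(self.inputs)


Node = Union[DataNode, ExecNode]


def sector_weight(sector: str) -> Callable[[list[Node]], float]:
    """Hypothetical calculation: % of holdings value in a given sector."""
    def calc(inputs: list[Node]) -> float:
        holdings = [
            h for parent in inputs if isinstance(parent, DataNode)
            for h in parent.edges if isinstance(h, DataNode)
        ]
        total = sum(h.attrs["value"] for h in holdings)
        matched = sum(h.attrs["value"] for h in holdings if h.attrs["sector"] == sector)
        return 100.0 * matched / total if total else 0.0
    return calc


# Hypothetical fund with three made-up holdings.
fund = DataNode("Fund A", {"kind": "fund"}, edges=[
    DataNode("EnergyCo", {"sector": "oil_gas", "value": 25.0}),
    DataNode("PipelineCo", {"sector": "oil_gas", "value": 15.0}),
    DataNode("SoftwareCo", {"sector": "tech", "value": 60.0}),
])
oil_gas_exposure = ExecNode("oil_gas_exposure", sector_weight("oil_gas"), inputs=[fund])
print(f"{oil_gas_exposure.run():.1f}%")  # 40.0%
```

Because the execution node reads its inputs at call time, changing a holding's value changes the answer the next time it runs, which is what makes the model "living" rather than a static report.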

When an analyst asks,

“What’s our exposure to oil & gas companies?”

a small, local LLM:

Translates that natural language query into a compact, DSL-based intermediate form

Converts that into Graph Query Language (GQL)

Runs it against a DOG, which calculates the answer dynamically across the connected financial network
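The first two steps of that pipeline can be sketched as follows. The LLM is replaced by an obvious stub so the example runs offline, and the DSL is compiled into a Cypher-style GQL query by plain, deterministic code. The DSL syntax, the graph labels (`Portfolio`, `Asset`, `HOLDS`) and the property names are hypothetical. The third step is left out because executing GQL needs a graph store that supports it.

```python
import re

# Hypothetical DSL shape: EXPOSURE field=value AGG measure
DSL_STATEMENT = re.compile(
    r"^EXPOSURE (?P<field>[a-z_]+)=(?P<value>[a-z_]+) (?P<agg>SUM|AVG|COUNT) (?P<measure>[a-z_]+)$"
)


def nl_to_dsl(question: str) -> str:
    """Stub standing in for the small local LLM; a real system would prompt the model."""
    q = question.lower()
    if "exposure" in q and ("oil" in q or "gas" in q):
        return "EXPOSURE sector=oil_gas SUM market_value"
    raise NotImplementedError("this stub only handles the oil & gas example")


def dsl_to_gql(dsl: str) -> str:
    """Deterministically compile one DSL statement into a GQL query string."""
    m = DSL_STATEMENT.match(dsl)
    if m is None:
        raise ValueError(f"invalid DSL: {dsl!r}")
    # The regex limits every token to [a-z_]+, so nothing can be injected into the query.
    return (
        "MATCH (p:Portfolio)-[:HOLDS]->(a:Asset) "
        f"WHERE a.{m['field']} = '{m['value']}' "
        f"RETURN {m['agg']}(a.{m['measure']}) AS result"
    )


question = "What's our exposure to oil & gas companies?"
dsl = nl_to_dsl(question)
gql = dsl_to_gql(dsl)
print(dsl)
print(gql)
```

The design choice worth noting is that the model only ever produces the short DSL line. The longer, stricter GQL is generated by ordinary code, so it cannot be hallucinated or malformed.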

This isn’t just about answering queries. It’s about interacting with living models that reflect the structure, behaviour, and calculations of real portfolios—on demand.

The Bigger Picture: Human Intention to Machine Execution

For engineers, product managers, and AI strategists, DSLs are the missing piece to bridge human requests with technical reality. They compress complexity, enforce discipline, and allow natural language to control structured systems in a reliable way.

Whether you’re building AI copilots, agentic systems, or interactive data models, DSLs help turn fragile prototypes into enterprise-grade tools.

With Dataception Ltd’s DOGs and DSL-powered AI, the future of intelligent automation isn’t just functional—it’s conversational, precise, and finally predictable.

Because in our world, AI isn’t just a tool. It’s a fluent, structured dialogue with your business logic.