Artificial intelligence is on the cusp of a new chapter: Reasoning Language Models (RLMs). These next-gen models don’t just predict the next token in a single pass like traditional LLMs; they work through a problem step by step.

RLMs represent a leap forward, incorporating structured, transparent reasoning processes that mimic human problem-solving. Instead of a single-pass black-box approach, RLMs explore multiple solution paths, verify their progress, and even backtrack if necessary, enabling them to tackle complex, multi-step problems with a new level of sophistication.

Why RLMs?

While traditional LLMs excel at generating fluent text and synthesizing information from large datasets, they have their limits:

- Single-Pass Processing: Outputs are probabilistic guesses, often opaque and unexplainable.

- Pattern Dependence: Reliance on pattern matching from training data restricts them in novel or nuanced scenarios.

- Limited Multi-Step Reasoning: They struggle with tasks like mathematical proofs, scientific analysis, or complex planning.

RLMs address these gaps by introducing explicit reasoning paths, structured exploration, and systematic evaluation, all grounded in transparent logic.

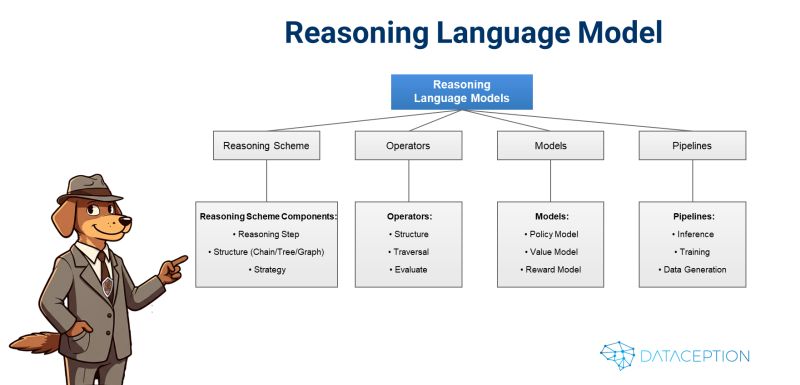

The Anatomy of RLMs

RLMs are built on a carefully designed framework with several key components:

1. Reasoning Scheme

The blueprint for how the model structures and develops its thinking process:

- Reasoning Step: The smallest unit of reasoning (e.g., a calculation or logical deduction).

- Reasoning Structure: How reasoning steps are organized—chains for linear logic, trees for branching paths, graphs for interconnected ideas.

- Reasoning Strategy: How the structure evolves as the model explores solutions (for example, Monte Carlo Tree Search or beam search).
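To make the step and structure ideas concrete, here is a minimal Python sketch. The `ReasoningStep` class and its methods are hypothetical names chosen for illustration, not taken from the paper; it only shows how a chain can be treated as a special case of a tree. Graphs, where a step can have several parents, are not covered.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class ReasoningStep:
    """One unit of reasoning, e.g. a single calculation or deduction (illustrative)."""
    text: str
    parent: Optional["ReasoningStep"] = field(default=None, repr=False)
    children: List["ReasoningStep"] = field(default_factory=list, repr=False)

    def add_child(self, text: str) -> "ReasoningStep":
        """Attach a follow-up step under this one and return it."""
        child = ReasoningStep(text=text, parent=self)
        self.children.append(child)
        return child

    def path(self) -> List[str]:
        """Return the step texts from the root down to this step."""
        steps, node = [], self
        while node is not None:
            steps.append(node.text)
            node = node.parent
        return list(reversed(steps))


# A chain is a tree in which every step has at most one child.
root = ReasoningStep("Problem: compute 12 * 15")
chain_end = root.add_child("12 * 15 = 12 * 10 + 12 * 5").add_child("120 + 60 = 180")

# A tree branches: an alternative decomposition of the same problem.
alt = root.add_child("12 * 15 = 10 * 15 + 2 * 15")

print(chain_end.path())
print(len(root.children))  # two branches under the root
```

Keeping a `parent` link on every step is a design choice that makes it cheap to recover the full reasoning path behind any intermediate result.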

2. Operators

The tools that manipulate and refine reasoning paths:

- Structure Operators: Modify the reasoning structure (e.g., add/remove steps).

- Traversal Operators: Navigate through the reasoning path.

- Update Operators: Improve existing parts of the reasoning structure without changing its shape (e.g., rewriting a step).

- Evaluate Operators: Assess the quality and validity of the reasoning.
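The four operator families can be sketched as plain functions over a small reasoning tree. Everything here is an assumption made for illustration: the `Node` class, the function names, and the hand-assigned scores stand in for whatever a real system would use (typically model calls).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(eq=False)
class Node:
    """A reasoning step with a quality score (hypothetical structure)."""
    text: str
    score: float = 0.0
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list, repr=False)


def expand(node: Node, candidates: List[str]) -> List[Node]:
    """Structure operator: add candidate next steps under a node."""
    new_nodes = [Node(text=c, parent=node) for c in candidates]
    node.children.extend(new_nodes)
    return new_nodes


def prune(node: Node) -> None:
    """Structure operator: detach a step (and its subtree) from the structure."""
    if node.parent is not None:
        node.parent.children.remove(node)
        node.parent = None


def leaves(node: Node) -> List[Node]:
    """Collect every leaf below (or at) the given node."""
    if not node.children:
        return [node]
    found: List[Node] = []
    for child in node.children:
        found.extend(leaves(child))
    return found


def select_best_leaf(root: Node) -> Node:
    """Traversal operator: pick the highest-scoring leaf to continue from."""
    return max(leaves(root), key=lambda n: n.score)


def refine(node: Node, new_text: str) -> None:
    """Update operator: rewrite one step in place; the tree shape is unchanged."""
    node.text = new_text


def evaluate(node: Node, scorer: Callable[[str], float]) -> float:
    """Evaluate operator: score a step with a caller-supplied function."""
    node.score = scorer(node.text)
    return node.score


root = Node("Solve 2x + 6 = 10")
a, b = expand(root, ["Subtract 6: 2x = 4", "Divide by 2: x + 6 = 5"])

# Hand-assigned scores (an assumption) standing in for a learned evaluator.
hand_scores = {"Subtract 6: 2x = 4": 0.9, "Divide by 2: x + 6 = 5": 0.1}
for n in (a, b):
    evaluate(n, lambda text: hand_scores.get(text, 0.0))

best = select_best_leaf(root)   # traversal picks the stronger branch
prune(b)                        # structure operator drops the flawed step
refine(best, "Subtract 6 from both sides: 2x = 4")
print(best.text, best.score)
```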

3. Models

RLMs utilize different models for generating, evaluating, and rewarding reasoning:

- Policy Model: Generates the next reasoning step based on the current state.

- Value Model: Estimates how promising a given reasoning state is, i.e. how likely it is to lead to a good final answer.

- Reward Model: Assesses the quality of each step, guiding improvements over time.
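As a rough illustration of how the three roles can cooperate, the toy below searches for a way to reach a target number from a starting number. All three "models" are hand-written stand-in functions (an assumption for this sketch; in a real RLM they would be learned), and the best-first search is just one possible way to combine them.

```python
import heapq
from typing import List, Optional, Tuple

TARGET = 10  # toy goal: reach this number from the start value


def policy_model(state: int) -> List[Tuple[str, int]]:
    """Stand-in policy: propose candidate next steps from the current state."""
    return [("+3", state + 3), ("*2", state * 2)]


def value_model(state: int) -> float:
    """Stand-in value: higher means the state looks closer to a solution."""
    return -float(abs(TARGET - state))


def reward_model(prev_state: int, new_state: int) -> float:
    """Stand-in reward: judge a single step; overshooting the target scores 0."""
    return 0.0 if new_state > TARGET else 1.0


def best_first_search(start: int, max_expansions: int = 50) -> Optional[List[str]]:
    """Expand the most promising state first; weaker branches stay queued,
    so the search falls back to them when a branch dead-ends."""
    # heapq is a min-heap, so the value is negated to pop the best state first.
    frontier = [(-value_model(start), start, [])]
    seen = {start}
    for _ in range(max_expansions):
        if not frontier:
            return None
        _, state, steps = heapq.heappop(frontier)
        if state == TARGET:
            return steps
        for label, nxt in policy_model(state):
            if nxt in seen or reward_model(state, nxt) == 0.0:
                continue  # skip repeated states and steps the reward model rejects
            seen.add(nxt)
            heapq.heappush(frontier, (-value_model(nxt), nxt, steps + [label]))
    return None


print(best_first_search(1))  # ['+3', '+3', '+3']
```

In this run the search first follows 1, 4, 8 because 8 looks closest to 10, finds that every step from 8 overshoots, and then falls back to 7, which leads to the answer.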

4. Pipelines

The workflows that power RLMs:

- Inference: Runs the reasoning process at query time to solve a given task.

- Training: Refines the model to improve reasoning performance.

- Data Generation: Creates training data tailored to multi-step reasoning tasks.
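A heavily simplified sketch of how the three pipelines might fit together is shown below. The function names, the deliberately flawed toy "model", and the placeholder `train` function are all assumptions for illustration; real pipelines are considerably more involved.

```python
from typing import Dict, List


def infer(numbers: List[int]) -> Dict:
    """Inference: solve one task and keep the intermediate steps as a trace.
    Toy model with a deliberate flaw: it ignores the sign of negative numbers."""
    total, trace = 0, []
    for n in numbers:
        n = abs(n)  # the flaw, so that some traces fail verification
        trace.append(f"{total} + {n} = {total + n}")
        total += n
    return {"problem": numbers, "trace": trace, "answer": total}


def generate_data(problems: List[List[int]]) -> List[Dict]:
    """Data generation: run inference and keep only traces whose answer verifies."""
    dataset = []
    for problem in problems:
        result = infer(problem)
        if result["answer"] == sum(problem):  # independent check of the final answer
            dataset.append(result)
    return dataset


def train(dataset: List[Dict]) -> Dict[str, int]:
    """Training placeholder: a real pipeline would update model weights here."""
    return {
        "examples_seen": len(dataset),
        "steps_seen": sum(len(d["trace"]) for d in dataset),
    }


dataset = generate_data([[1, 2, 3], [4, -1]])
print(dataset[0]["trace"])
print(train(dataset))
```

Only the first problem survives the filter, because the toy model gets `[4, -1]` wrong; the point is that verified multi-step traces, not just final answers, become the training data.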

Key Advantages of RLMs

- Transparent Outputs: Unlike LLMs, RLMs provide a clear reasoning path, making their decisions more explainable.

- Multi-Step Capability: They excel in complex workflows requiring iterative problem-solving, like scientific research or financial modeling.

- Dynamic Problem Solving: By leveraging trees, chains, and graphs, RLMs adapt to the demands of each unique problem.

- Error Correction: Backtracking capabilities allow them to course-correct when errors are detected.

The Trade-Off: Capability vs. Efficiency

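These benefits don’t come free. RLMs are more computationally expensive and more architecturally complex than traditional LLMs, because exploring and evaluating several reasoning paths takes many more model calls than a single pass. Choosing between RLMs and traditional LLMs depends on your goals: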

- For nuanced, high-assurance tasks: RLMs shine with their reasoning depth and reliability.

- For general-purpose tasks: LLMs remain the cost-effective choice.

Experimenting with the Future

At Dataception, we’ve been working on integrating RLM principles into our Data Object Graphs (DOGs) framework. By tying the reasoning components into the dynamic, interconnected architecture of DOGs, we believe we can develop a more efficient model without requiring billions of parameters.

Imagine a system where reasoning doesn’t just produce results—it evolves dynamically, adjusts to feedback, and integrates seamlessly into business processes. That’s where we’re headed.

The Next Chapter in AI

Reasoning Language Models are not just the next step in AI—they’re the step that transforms AI from a tool into a true collaborator. By bridging the gap between probabilistic generation and structured reasoning, RLMs offer a glimpse of AI’s potential to solve the world’s most complex problems.

Reasoning Language Models: A Blueprint

Link to paper: https://arxiv.org/abs/2501.11223

Stay tuned for our own advancements in this space—we believe this is just the beginning.

With Dataception’s cutting-edge approaches, AI truly is just a walk in the park!